SWE 622, Spring 2017, Homework 4, due 4/12/2017 4:00pm.

Introduction

Throughout the semester, you will build a sizable distributed system: a distributed in-memory filesystem. The goal of this project is to give you significant hands-on experience with building distributed systems, along with a tangible project that you can describe to future employers to demonstrate your (now considerable) distributed software engineering experience. We’ll do this in pieces, with a new assignment every two weeks that builds on the prior. You’re going to create an actual filesystem – so when you run CFS, it will mount a new filesystem on your computer (as if you are inserting a new hard disk), except that the contents of that filesystem are defined dynamically, by CFS (rather than being stored on a hard disk).

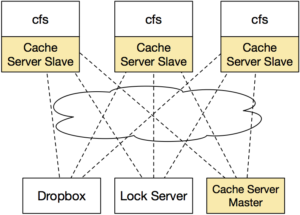

While we’re not focusing on making this a high-throughput system (for simplicity, we are implementing the filesystem driver in Java, which will inherently add some sizable performance penalty compared to implementing it in native code), you should be able to see the application of our system on the real world: often times, we build services that need to share data. If the total amount of data that we need to store is large, then we’ll probably set up some service specifically devoted to storing that data (rather than storing it all on the same machines that are performing the computation). For performance, we’ll probably want to do some caching on our clients to ensure that we don’t have to keep fetching data from the storage layer. Once we think about this caching layer a little more, it becomes somewhat more tricky in terms of managing consistency, and that’s where a lot of the fun in this project will come in. Here’s a high-level overview of the system that we are building:

Each computer that is interested in using the filesystem will run its own copy of the filesystem driver (we’ll call it CloudFS, or CFS for short). We’ll use Dropbox’s web API to provide the permanent storage, and will implement a cache of our Dropbox contents in our CFS driver. A lock service will make sure that only a single client writes to a file at a time (HW2). Over the course of the semester, we’ll set up the cache to be distributed among multiple CFS clients (HW3), distribute the lock server between the clients to increase fault tolerance (HW4), investigate fault recovery (HW5), and add security and auditing (HW6).

Acknowledgements

Our CloudFS is largely inspired by the yfs lab series from MIT’s 6.824 course, developed by Robert Morris, Frans Kaashoek and the 6.824 staff.

Homework 4 Overview

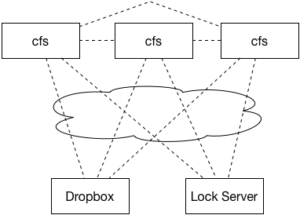

We’re going to make a slight change to our system model of CFS for this assignment. In this assignment, we’re going to expand CFS so that we can improve the performance of the cache and continue towards fault tolerance. This assignment, our architecture will look something like this:

In this assignment, you will completely discard your existing lock server implementation and implement a new system that accomplishes the same tasks (locking files, maintaining a count of active CFS clients) using ZooKeeper.

There will be two high level tasks for this assignment:

- Tracking group membership using ZooKeeper (instead of your heartbeat protocol)

- Tracking lock ownership using ZooKeeper

Your implementation of Homework 4 should build directly on your successful Homework 3 implementation. As in HW3, you will not be penalized again for (non-crashing) bugs that you had in your previous implementations; that is, if you didn’t implement the WAIT stuff correctly in HW3, that won’t come back to bite you. However, this does not change the fact that basic filesystem operations should still work “correctly” for a single client (e.g. not crash, mkdir should still make a new directory in most cases, etc.). If you have any questions on this topic, please ask me directly.

This assignment totals 80 points, and will correspond to 8% of your final grade for this course.

Getting Started

Start out by importing the HW4 GitHub repository. Then, clone your private repository on your machine, and import it into your IDE. Copy your CFS client from HW3 into your HW4 project. You may choose to start your HW4 CloudProvider from scratch, or build directly upon what you had in HW3. If you copy your RedisCacheProvider from HW3, please note that the constructor has changed for HW4 to set up the ZooKeeper connection.

You’ll notice there are now several projects in the repository:

- fusedriver – Your CFS driver, just like in HW3

- lock-server – The lock server code. You should not change anything in this directory. The semantics for the lock server will be implemented by each client, on top of ZooKeeper. The lock server exists to create a cluster of ZooKeeper servers for your CFS clients to connect to.

You’ll also find a Vagrantfile in the repository, similar to the configuration used for HW3, including Redis. The first time you start it, it will install the correct version of Redis. If it fails for any reason (for instance, network connectivity issues), try to run vagrant provision to have it re-try.

At a high level, your task is to modify your edu.gmu.swe622.cloud.RedisCacheProvider so that: (1) it reports its liveness to the ZooKeeper cluster and uses the ZooKeeper group membership information to correctly call the Redis WAIT function and (2) uses ZooKeeper to implement locking. Note that for this assignment, you will be graded on all error handling aspects of your ZooKeeper interactions (that is, prepare for the possibility that a ZooKeeper operation might not succeed).

Additional details are provided below.

Tips

You should copy your config.properties over from HW3.

You can run the lock server in the VM, by running:

    vagrant@precise64:/vagrant$ mvn package
    vagrant@precise64:/vagrant$ java -jar lock-server/target/CloudFS-lock-server-hw4-0.0.1-SNAPSHOT.jar

And, you can run the CFS client:

    vagrant@precise64:/vagrant$ java -jar fusedriver/target/CloudFS-hw4-0.0.1-SNAPSHOT.jar -mnt cfsmnt

The first time you run it, you’ll need to re-authorize it for Dropbox (the config files that authorized it before were local to the VM used for HW1, HW2 and HW3).

If you want to remove what’s stored in Redis, the easiest way is using the FLUSHALL command in redis-cli:

    vagrant@precise64:/vagrant$ redis-cli
    redis 127.0.0.1:6379> FLUSHALL
    OK
    redis 127.0.0.1:6379> QUIT

Debugging remote JVMs

You can launch a Java process and have it wait for your Eclipse debugger to connect to it by adding this to the command line:

    -Xdebug -Xrunjdwp:transport=dt_socket,server=y,address=5005

After you make this change, disconnect from the VM if you are connected, then do “vagrant reload” before “vagrant ssh” to apply your changes.

Then, in Eclipse, select Debug… Debug configurations, then “Remote Java Application”, then “create new debug configuration.” Select the appropriate project, then “Connection Type” => “Standard (socket attach)”, “Host” => “localhost” and “port” => 5005 (if you are NOT using Vagrant) or 5006 (if you ARE using Vagrant).

Testing

A good way to test this assignment will be to have multiple instances of CFS running, each mounting your filesystem to a different place. Then, you can observe if writes appear correctly across the clients.

Dealing with ZooKeeper

ZooKeeper is set up to not persist any of its state to disk. That is, if you kill the ZooKeeper server and then restart it, it will be empty. This is done to make it easier for you to test: if you accidentally take out locks on everything and then your client crashes, it’s annoying to clean everything up. This way, if you ever end up in an inconsistent state, you can always stop the ZooKeeper server and restart it (bringing it back to a clean state). Of course, this means that our ZooKeeper deployment is not very fault tolerant, but that is just a configuration option, which could easily be changed if we wanted persistence.

Remember to make sure that you close your ZooKeeper session before your CFS client terminates! This will allow for automatic cleanup of things like locks and group membership. A good way to do it is by adding this code early on, for instance in your main function:

    Runtime.getRuntime().addShutdownHook(new Thread(new Runnable() {
        @Override
        public void run() {
            curator.close();
        }
    }));

Part 1: Group Membership (40 points)

Recall that our goal from HW3 for Redis replication was to make a best-effort attempt to ensure that every write gets to every replica. To do so, we need to know how many replicas there are at any given point. We implemented a heartbeat protocol to track client liveness, using our lock server to track client state. This wasn’t great, because if the lock server failed, the entire system would become unavailable. Now, you will use ZooKeeper to track client liveness, specifically using Apache Curator’s Group Membership recipe. You will find that the RedisCacheProvider is already configured to create a Curator connection to a ZooKeeper cluster that is run by the lock server.

Note the interface for Curator’s group membership:

    public GroupMember(CuratorFramework client, String membershipPath, String thisId, byte[] payload)

    Parameters:
    client - client instance
    membershipPath - the path to use for membership
    thisId - ID of this group member. MUST be unique for the group
    payload - the payload to write in our member node

    group.start();
    group.close();
    group.getCurrentMembers();

ZooKeeper/Curator will automatically keep track of which nodes are still in the group, removing them when they disconnect. Note that GroupMember tracks an ID for each client (“thisId”). This ID must be unique for the CFS process that’s interacting with ZooKeeper. Use a mechanism from Curator/ZooKeeper to generate unique IDs (like a SharedCounter).
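A shared counter only yields unique IDs if each client claims a value with a compare-and-set style update and retries when another client got there first. The sketch below shows that retry loop using a JDK AtomicInteger as a local stand-in for a ZooKeeper-backed counter, so it can be run without a cluster. The class name, the method name and the "cfs-" prefix are hypothetical, and the real Curator calls are deliberately not shown.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Local stand-in only: an AtomicInteger plays the role of a counter shared
// through ZooKeeper, so the retry loop can be run without a cluster.
class UniqueIdSketch {
    // Hypothetical helper: claims the next unused ID from the shared counter.
    static int claimNextId(AtomicInteger sharedCounter) {
        while (true) {
            int seen = sharedCounter.get();   // read the current value
            int wanted = seen + 1;            // the ID we hope to claim
            // Succeeds only if nobody changed the counter since we read it;
            // otherwise loop and try again with the fresh value.
            if (sharedCounter.compareAndSet(seen, wanted)) {
                return wanted;
            }
        }
    }

    public static void main(String[] args) {
        AtomicInteger counter = new AtomicInteger(0);
        String first = "cfs-" + claimNextId(counter);
        String second = "cfs-" + claimNextId(counter);
        System.out.println(first + " " + second); // prints "cfs-1 cfs-2"
    }
}
```

The important property is that an ID is only used after the update that claimed it has succeeded. Reading the counter and writing it back in two unconditional steps would let two clients end up with the same ID.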

Update your WAIT implementation from HW3 to use ZooKeeper to know the number of active Redis peers to wait for.

Make sure to handle various error conditions, which might include:

- Inability to join the group

- Inability to leave the group

- Inability to get the number of members of the group

In these cases, it is probably wise to throw an IOException() which will then get propagated back to the process trying to use your filesystem.
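One way to keep this error handling uniform is to route every ZooKeeper-related step through a small wrapper that converts any failure into an IOException naming the step that failed. The following is a minimal sketch under that assumption. ZkErrorSketch and orIOException are hypothetical names, and the lambdas in main are stand-ins for real group operations, not Curator calls.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

class ZkErrorSketch {
    // Hypothetical helper: runs one ZooKeeper-related step and converts any
    // failure into an IOException that names the step that failed.
    static <T> T orIOException(String step, Callable<T> op) throws IOException {
        try {
            return op.call();
        } catch (IOException e) {
            throw e;                             // already the right type
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();  // keep the interrupt flag set
            throw new IOException("Interrupted during: " + step, e);
        } catch (Exception e) {
            throw new IOException("ZooKeeper step failed: " + step, e);
        }
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for asking the group for its current member count.
        int members = orIOException("count group members", () -> 3);
        System.out.println("members = " + members);

        try {
            // Stand-in for a join attempt that fails.
            orIOException("join group", () -> {
                throw new IllegalStateException("simulated connection loss");
            });
        } catch (IOException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Keeping the original exception as the cause means the underlying ZooKeeper failure is still visible in a stack trace, while callers of the filesystem only ever see an IOException.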

Part 2: Distributed Locks (40 points)

Change your RedisCacheProvider to use ZooKeeper to acquire and release locks. For HW4, please consider the following locking semantics:

unlink: Acquire a write-lock on the file

put: Acquire a write-lock on the file

get: Acquire a read-lock on the file

Note the simplification from the past – there is no need to consider directory locking. When locking a file, there is no need to consider locking its parents. The only calls that you need to use ZooKeeper for locking on are put, unlink and get, and they should only acquire a lock on the specific file being accessed.

Be careful to handle all error cases related to ZooKeeper, including but not limited to:

- Inability to create a lock

- Inability to take a lock

- Inability to release a lock
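The locking semantics above boil down to three steps: pick a read or write lock from the operation, acquire it with a timeout, and always release it in a finally block. The sketch below illustrates that shape using the JDK’s ReentrantReadWriteLock as a local stand-in. It only coordinates threads inside one JVM, so it is not a solution to this part; the ZooKeeper-backed lock would take its place. FileLockSketch, Op, IOAction and withLock are hypothetical names, and the timeout value is arbitrary.

```java
import java.io.IOException;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class FileLockSketch {
    enum Op { GET, PUT, UNLINK }

    // Hypothetical callback type for the filesystem work done under the lock.
    interface IOAction<T> { T run() throws IOException; }

    // One read/write lock per file path (local stand-in for a ZooKeeper lock).
    private final ConcurrentHashMap<String, ReadWriteLock> locks = new ConcurrentHashMap<>();

    // get takes a read lock; put and unlink take a write lock.
    static boolean needsWriteLock(Op op) {
        return op != Op.GET;
    }

    <T> T withLock(Op op, String path, long timeoutMs, IOAction<T> body) throws IOException {
        ReadWriteLock rw = locks.computeIfAbsent(path, p -> new ReentrantReadWriteLock());
        Lock lock = needsWriteLock(op) ? rw.writeLock() : rw.readLock();
        boolean held;
        try {
            held = lock.tryLock(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IOException("Interrupted while locking " + path, e);
        }
        if (!held) {
            throw new IOException("Timed out locking " + path);
        }
        try {
            return body.run();
        } finally {
            lock.unlock(); // released even if the body throws
        }
    }

    public static void main(String[] args) throws IOException {
        FileLockSketch fs = new FileLockSketch();
        String data = fs.withLock(Op.GET, "/notes.txt", 100, () -> "contents");
        System.out.println(data); // prints "contents"
    }
}
```

With a distributed lock, the release step can itself fail, so a real implementation would also need to decide how to report a failed release without hiding an exception thrown by the body.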

Submission

Perform all of your work in your homework-4 git repository. Commit and push your assignment. Once you are ready to submit, create a release, tagged “hw4.” Unless you want to submit a different version of your code, leave it at “master” to release the most recent code that you’ve pushed to GitHub. Make sure that your name is specified somewhere in the release notes. The time that you create your release will be the time used to judge that you have submitted by the deadline. This is NOT the time that you push your code.

Make sure that your released code includes all of your files and builds properly. You can do this by clicking to download the archive, and inspecting/trying to build it on your own machine/vm. There is no need to submit binaries/jar files (the target/ directory is purposely ignored from git). It is accepted (and normal) to NOT include the config.properties file in your release.

If you want to resubmit before the deadline, simply create a new release; we will automatically use the last release you published. If you submitted before the deadline and then decide to make a new release within 24 hours after the deadline, we will automatically grade your late submission and subtract 10%. Any releases created more than 24 hours past the deadline will be ignored.