CS475 Final Project, due 12/7/19, 9:00pm, NO LATE SUBMISSIONS ACCEPTED

Start by downloading the handout

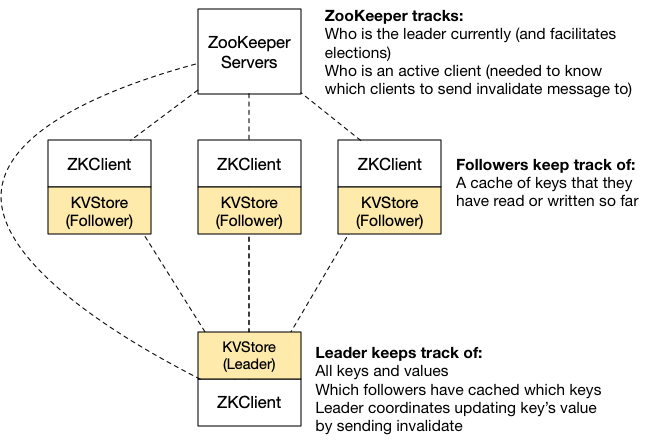

In this final project, you will create a consistent, fault-tolerant distributed key/value store using ZooKeeper. The design of this system has the following goals in mind:

- Consistency: Key/value pairs can be created by any node. Any node can query the system to find the current value of a key. Replication will be sequentially consistent: no node will be able to read an out-of-date value.

- Fault tolerance: The system overall will tolerate a simultaneous failure of a minority of nodes. Any data that was held only by those nodes will be lost, but the system will otherwise continue to function.

- Partition awareness: If a participant becomes aware that it is in a minority partition, it will cease to operate and abort any pending operations.

Due to the added technical complexity of this project (including its use of a new-to-you technology, ZooKeeper), you may complete this project individually, or in groups up to size 3 (e.g. you, with 2 other team-mates). We are also trying something new with the test cases for this assignment: we are distributing some of the core test cases to you, but a significant portion of them will not be visible to you, and will be only on AutoLab. You will be strictly limited to 10 submissions on AutoLab, so you are very strongly encouraged to (1) find one/two team mates, and (2) reason through all of the possible failure modes of your clients so that you can get the assignment correct, even without repeatedly running all of the tests.

You implemented a distributed key-value store in Homework 4. The design of Homework 4’s key-value store did not meet the goals outlined above: if a single replica failed, then all writes might be blocked (since the server, which was coordinating the writes, wouldn’t be able to receive a response from a replica). Moreover, if the server failed, then the entire game was over: there would be no way for the remaining replicas to pick up the pieces and continue operating.

Homework 4 consisted of a key-value store that featured transactions. For this final project, you will not need to worry about transactions, but instead will focus on fault tolerance. For this project, you’ll implement a distributed key-value store with a rotating leader that is in charge of coordinating writes. The primary reason why we have designated a single node as the lock server in the past is because it eliminates the need for all of our replicas to come to some mutual agreement between each other of who the lock server (leader) is. Now, we will allow any replica to be the leader, and use ZooKeeper to perform this coordination between replicas so that they can establish which is the leader at any given point. Of course, if two replicas were to simultaneously consider themselves leader, then this would be bad, since each would assume it had free rein to perform updates.

The primary role of the leader is to keep track of (1) all of the keys and their values and (2) which nodes have a copy of each key. When a key’s value is updated, the leader will notify all clients that have a copy of that key to invalidate their cache.

Your key-value store will expose just two simple operations to the end-user:

- getValue(String key)

- setValue(String key, String value)

Overview

Reading values:

To read a key K, if node N currently has the value for that key cached (e.g. from previously reading it or writing it), then it will directly return it, without contacting any other node. Otherwise: To read a key K, a node N will contact the leader and retrieve the value. If the key is unknown to the leader, it will return null. Clients must not cache null values. If the key is known to the leader, it will record the fact that node N now has a cached copy of this key’s value.

If the node is disconnected from ZooKeeper: If node N does not currently hold a live ZooKeeper session (e.g. it timed out), then it will throw an IOException for any read operation. It is OK to return a stale, cached value if node N is disconnected from ZooKeeper but has not detected that disconnection yet.

If the node is not able to reach the leader and the leader is disconnected from ZooKeeper: If node N is unable to contact the leader but does currently hold a live ZooKeeper session (and the leader does not have a live ZooKeeper session), then it will first participate in the leader election described below, and then complete the read as described above.

If the node is not able to reach the leader, and the node does not have the value cached, but the leader is still active in ZooKeeper: If node N is unable to contact the leader, but ZooKeeper indicates that the leader and client are both still active in ZooKeeper, then the client must wait for the leader to become available (this is the default RMI behavior when an RMI endpoint is not reachable). You do not have to consider the case of the leader being available on ZooKeeper at the start of an operation, and then disconnecting from ZooKeeper while an operation is in progress. If you have the value cached, you can serve it directly.

Writing values

To write a key K, node N will ask the leader to update the value. The leader will:

- Notify all clients to invalidate their cache, and clear its list of clients which have this key cached

- Update the value

- Add node N to the (now emptied) list of clients with this key cached

It is very important that only one write to a key can occur at a time, and no reads can occur during a write.

If the node that wants to write the value is disconnected from ZooKeeper: If node N does not currently hold a live ZooKeeper session (e.g. it timed out), then it will throw an IOException for any write operation.

If the leader is disconnected from the client: If node N is not able to contact the leader, but does currently hold a live ZooKeeper session (and the leader does not), then it will first participate in a leader election described below, and then complete the write as described above.

If the node is able to reach the leader but the leader is disconnected from ZooKeeper: The leader must not begin any writes until it validates that it holds a valid ZooKeeper session. If it finds it does not hold a valid ZooKeeper session, it must throw an IOException

If the leader is unable to contact a client with a cached version of that key: The leader must wait until all invalidate messages are acknowledged. However, if a client becomes disconnected from ZooKeeper, and the leader detects this, it should ignore the failure of the invalidate message (since ZooKeeper agrees that that node has failed). You do not have to consider the case of the client being available on ZooKeeper at the start of an operation, and then disconnecting from ZooKeeper while an invalidate is in progress.

If the node is not able to reach the leader, but the leader is still active in ZooKeeper: If node N is unable to contact the leader, but ZooKeeper indicates that the leader and client are both still active in ZooKeeper, then the client must wait for the leader to become available (this is the default RMI behavior when an RMI endpoint is not reachable). You do not have to consider the case of the leader being available on ZooKeeper at the start of an operation, and then disconnecting from ZooKeeper while an operation is in progress.

Tracking node membership

Key to the successful operation of your key-value store will be a global, shared understanding of which nodes are currently alive and connected to the system. Otherwise, reads and writes might become stalled by partitioned or crashed nodes. You’ll use Curator’s PersistentNode abstraction to track which nodes are currently active participants.

Leader election

If there is currently no leader (e.g. when the system starts up, or when the leader becomes disconnected), then any nodes who can still contact a quorum of ZooKeeper nodes will participate in a leader election. You will use Curator’s LeaderLatch to perform the election.

Leader initialization

If this is the first leader, then the leader won’t need to do anything to initialize itself – there will be no data at the start, and hence no nodes will have any copies of any values, and no nodes will currently hold any ownership of any keys. However, if this is a leader being promoted after a prior leader failed or was disconnected, then it will simply initialize itself using whatever cached data it had at the time it was promoted. Hence, some key/value pairs might be lost (if only the leader had them). When a node detects that the leader has changed (and that it is not the leader), it must flush its entire cache.

Disconnection and Reconnection

When a node is reconnected to a quorum of ZooKeepers, it will check to see how many other nodes are there. If there are none, it will perform the leader election described above and then assume a leader role, and can initialize itself using any cached data that it has. If, however, there are other nodes present at the moment that it reconnects (regardless of who the leader is/was), the node will flush its entire local cache, including all values and cache information.

General Instructions

We have provided you a baseline implementation of the Key/Value server that handles all user interaction, creates a ZooKeeper server and connects to it, but does not do anything (that is, you will not need to implement any UI or command processors; you will not need to add much RMI boilerplate). You may not include any additional libraries (e.g. download and and require additional JARs), although feel free to use any of the various libraries included with Java 8 or already included by the starter project.

You must use exclusively reentrant locks (e.g. synchronized or ReentrantReadWriteLock): no StampedLocks.

Your KV Store will be compiled and tested using apache maven, which automatically executes the provided JUnit tests. Please install Maven on your computer. Unfortunately, Maven is not installed on Zeus, however you can download the most recent version (e.g. apache-maven-3.6.1-bin.zip) and unzip it in your home directory. Then, to run maven, type ~/apache-maven-3.6.1/bin/mvn in the snippets below (instead of just mvn). Note that you can easily import maven projects into eclipse and IntelliJ (we suggest IntelliJ over eclipse).

To compile and run your shell, run mvn package in the top level directory and then run java -jar target/kvstore-2019.3.5-SNAPSHOT.jar to start the KV server. Just run the jar once: it will create a ZooKeeper and a client. To create more clients, use the new-client command. You’ll notice that the text-mode interface we’ve provided for you has a handy help command. Since this single CLI will be a front-end for multiple clients, you’ll need to include the client ID in each command, e.g. get 0 foo will issue the get command to client . put 1 foo bar will invoke setValue on client 1. We’ve also provided commands that you can use to simulate a service failing: either disabling a client’s connection to ZooKeeper, or blocking other clients from talking to it over RMI.

|

1

2

3

4

5

6

7

8

9

10

11

|

cs475sh:>help

AVAILABLE COMMANDS

get: Get a key

list: List clients

new-client: Create a new client

put: Set a key

rmi-down: Disable a client's inbound RMI services

rmi-up: Resume a client's inbound RMI services

zk-down: Disable a client's access to ZooKeeper

zk-up: Resume a client's access to ZooKeeper

|

To build the jar file without running the tests, run mvn -DskipTests package.

Your assignment will be automatically graded for correctness (note that there will be a manual grading phase to check hard-to-automatically-catch and concurrency issues). Included with your handout is part of the test script that we will use to grade your assignment. Upon submitting your assignment, our server will automatically compile and test your assignment and provide you with test results. You can resubmit only ten times until the deadline. To run the portion of the tests that we are providing you with, simply execute mvn test (of course, if you do this first off, you’ll see that they all fail!)

Note: Your code must compile and run on the autograder, under Java 8. It is unlikely that you will have any difficulties developing on a Mac, Linux or Windows, but please keep in mind the possibility of portability problems. When you feel satisfied with implementing one phase of the assignment, submit to AutoLab and verify that AutoLab agrees.

General coding/grading requirements:

- You must use exclusively reentrant locks (e.g. synchronized or ReentrantReadWriteLock).

- You should feel free to add whatever additional classes you wish, or any additional methods to the existing edu.gmu.cs475.KVStore. You must not modify the edu.gmu.cs475.internal.IKVStore interface, the edu.gmu.cs475.AbstractKVStore, any of the tests, or any of the internal classes.

- Your code should be thread-safe: concurrent calls to any of these methods (or any other method in KVStore) should not have any races. It should now be clearer how this can occur — you will potentially have multiple clients attempting to interact with the server simultaneously.

- You must not store any state in static fields

Part 1: ZooKeeper Setup (20/150 points)

Your KVServer will automatically get passed a Curator (ZooKeeper) client object. It’s up to you to manage that connection. To satisfy the requirements above, you’ll need to

- Create an Ephemeral, PersistentNode to represent your KVServer in the pool of all active servers

- Create a LeaderLatch to elect and maintain a leader of the group. The best way to find out who the leader is is to call getLeader() on your LeaderLatch – not using the LeaderLatchListener, since the LeaderLatchListener will notify you only if you become the leader or no longer are the leader – it will not tell you if you are not the leader, and the leader changes.

Your first entry point will be initClient:

|

1

2

3

4

5

6

7

8

9

10

11

12

|

/**

* This callback is invoked once your client has started up and published an RMI endpoint.

*

* In this callback, you will need to set-up your ZooKeeper connections, and then publish your

* RMI endpoint into ZooKeeper (publishing the hostname and port)

*

* You will also need to set up any listeners to track ZooKeeper events

*

* @param localClientHostname Your client's hostname, which other clients will use to contact you

* @param localClientPort Your client's port number, which other clients will use to contact you

*/

public void initClient(String localClientHostname, int localClientPort);

|

You should use the ZooKeeper client zk, should create your ephemeral node at the path ZK_MEMBERSHIP_NODE + "/" + getLocalConnectString(). You should create your LeaderLatch at the path ZK_LEADER_NODE. Make sure to specify the id of your LeaderLatch to identify it (in the constructor), specifically passing getLocalConnectionString() as the id.

You can detect when your client becomes connected to and disconnected from ZooKeeper in the following callback:

|

1

2

3

4

5

6

7

8

|

/**

* Called when there is a state change in the connection

*

* @param client the client

* @param newState the new state

*/

@Override

public void stateChanged(CuratorFramework client, ConnectionState newState)

|

Frequently asked question: Why can’t we just use the leaderLatch to keep track of all of the connected clients? Unfortunately, the Curator API does not guarantee that the list of participants in the leaderLatch is updated (it is cached, and refreshed at arbitrary intervals).

Warning: If you want to clean up these resources (e.g. call close, in the _cleanup method), make certain that you only call close if you are actually connected to ZooKeeper, otherwise you will see timeouts!

Grading:

- 16pts JUnit tests (8 pts x 2 tests)

- 4pts Manual inspection

Part 2: Key-Value Server with no failover (80/150 points)

Implement the external API (which is used by the text interface and the tests) as well as the peer-to-peer API that is used by non-leaders to invoke functions on the leader, and by the leader to invoke functions on the followers. You should use the connectToKVStore method (in AbstractKVStore) to connect from one client to another (e.g. to the leader or to a follower). The parameter that you pass to it is the same value that gets returned from getLocalConnectString().

The client-facing API:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

|

/**

* This callback is invoked once your client has started up and published an RMI endpoint.

*

* In this callback, you will need to set-up your ZooKeeper connections, and then publish your

* RMI endpoint into ZooKeeper (publishing the hostname and port)

*

* You will also need to set up any listeners to track ZooKeeper events

*

* @param localClientHostname Your client's hostname, which other clients will use to contact you

* @param localClientPort Your client's port number, which other clients will use to contact you

*/

public abstract void initClient(String localClientHostname, int localClientPort);

/**

* Retrieve the value of a key

* @param key

* @return The value of the key or null if there is no such key

* @throws IOException if this client or the leader is disconnected from ZooKeeper

*/

public abstract String getValue(String key) throws IOException;

/**

* Update the value of a key. After updating the value, this new value will be locally cached.

*

* @param key

* @param value

* @throws IOException if this client or the leader is disconnected from ZooKeeper

*/

public abstract void setValue(String key, String value) throws IOException;

|

To communicate between nodes, you’ll implement the following RMI-based API:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

|

/**

* Request the value of a key. The node requesting this value is expected to cache it for subsequent reads.

*

* This command should ONLY be called as a request to the leader.

*

* @param key The key requested

* @param fromID The ID of the client making the request (as returned by AbstractKVStore.getLocalConnectString())

* @return The value of the key, or null if there is no value for this key

*

* DOES NOT throw any exceptions (the RemoteException is thrown by RMI if the connection fails)

*/

public String getValue(String key, String fromID) throws RemoteException;

/**

* Request that the value of a key is updated. The node requesting this update is expected to cache it for subsequent reads.

*

* This command should ONLY be called as a request to the leader.

*

* @param key The key to update

* @param value The new value

* @param fromID The ID of the client making the request (as returned by AbstractKVStore.getLocalConnectString())

*

* DOES NOT throw any exceptions (the RemoteException is thrown by RMI if the connection fails)

*/

public void setValue(String key, String value, String fromID) throws RemoteException;

/**

* Instruct a node to invalidate any cache of the specified key.

*

* This method is called BY the LEADER, targeting each of the clients that has cached this key.

*

* @param key key to invalidate

*

* DOES NOT throw any exceptions (the RemoteException is thrown by RMI if the connection fails)

*/

public void invalidateKey(String key) throws RemoteException;

|

It is very important that you consider concurrency here: multiple clients might be attempting to write the same or different keys concurrently. A single client might be receiving multiple invalidate requests simultaneously. Your grade for part 2 will not consider any cases where a leader or client fails.

Reminder: When sending invalidate messages, the leader must send these messages to ALL clients with the key cached – even if that includes the very client proposing to update the key!

Grading:

- 60 points JUnit tests (6 pts x 10 tests)

- 20 points concurrency

Part 3: Fault Tolerance (50/150 points)

Implement the fail-over protocol described in the project summary above. For each method, consider “what would happen if I was disconnected from ZooKeeper at this point?” Consider: “what would happen if the leader stopped responding?” or “what would happen if a follower stopped responding?” If the system behavior in a specific failure is unclear to you after reading the summary above, then please post a specific question on Piazza.

Your fault-tolerance handling will be graded primarily by a battery of JUnit tests, which you will not have access to. However, every time that you submit your assignment on AutoLab, they will run. Hence, you are very strongly encouraged to reason through the failure cases and your code’s response to them, and test different failure modes (using the text interface, you can simulate ZooKeeper disconnecting from a client, or a client ceasing to respond to RMI messages. You are certainly not required to write actual JUnit tests, but should feel free to do so if you think it will be helpful; we’ve provided one such sample test in your handout. Note 11/21: Please do not try to use the blockedUntilNotLeader method – it is not needed for the test scenarios, and is not correctly implemented. You should feel free to implement such functionality yourself though, if you wish.

Grading:

- 42 points JUnit tests (6 pts x 7 tests)

- 8 points manual

Grading

Your assignment will be graded on a series of functional tests, making sure that it implements the specification above.

Hand In Instructions

You must turn in your assignment using Autolab (You MUST be on the campus network, or connected to the GMU VPN to connect to Autolab). If you did not receive a confirmation email from Autolab to set a password, enter your @gmu.edu (NOT @masonlive) email, and click “forgot password” to get a new password.

Create a zip file of the root directory in your assignment (please: .zip, not .tgz or .7z etc) — this is the root directory that includes the src directory and the pom.xml file. When you upload your assignment, Autolab will automatically compile and test it. You should verify that the result that Autolab generates is what you expect. Your code is built and tested in a Linux VM. Assignments that do not compile using our build script will receive a maximum of 50%. Note that we have provided ample resources for you to verify that our view of your assignment is the same as your own: you will see the result of the compilation and test execution for your assignment when you submit it.

You can submit your assignment only ten times before the deadline – so it is in your best interests to try to thoroughly understand the requirements of this assignment and make a judicious use of the autograder. For this project, no late submissions will be accepted, not even those only minutes late.

Note – You MUST be on the campus network, or connected to GMU VPN to connect to Autolab.

Decoding the output:

Note, AutoLab will run your code on the tests twice: once without RV-Predict (these are the scores used for parts 1-3), and once with RV-Predict (this is informational only). The outcomes should be the same with or without RV-Predict, but we wanted to make 100% sure that adding the tool doesn’t break your otherwise seemingly functioning code.

Questions

Please post questions on Piazza