SWE 622, Spring 2017, Homework 1, due 2/8/2017 4:00pm.

Introduction

Throughout the semester, you will build a sizable distributed system: a distributed in-memory filesystem. The goal of this project is to give you significant hands-on experience with building distributed systems, along with a tangible project that you can describe to future employers to demonstrate your (now considerable) distributed software engineering experience. We’ll do this in pieces, with a new assignment every two weeks that builds on the prior. You’re going to create an actual filesystem – so when you run CFS, it will mount a new filesystem on your computer (as if you are inserting a new hard disk), except that the contents of that filesystem are defined dynamically, by CFS (rather than being stored on a hard disk).

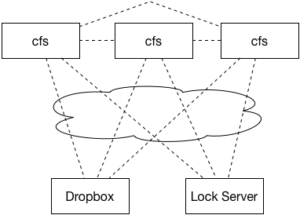

While we’re not focusing on making this a high-throughput system (for simplicity, we are implementing the filesystem driver in Java, which inherently adds a sizable performance penalty compared to native code), you should be able to see how our system applies to the real world: oftentimes, we build services that need to share data. If the total amount of data that we need to store is large, then we’ll probably set up some service specifically devoted to storing that data (rather than storing it all on the same machines that are performing the computation). For performance, we’ll probably want to do some caching on our clients to ensure that we don’t have to keep fetching data from the storage layer. Once we think about this caching layer a little more, managing consistency becomes trickier, and that’s where a lot of the fun in this project will come in. Here’s a high-level overview of the system that we are building:

Each computer that is interested in using the filesystem will run its own copy of the filesystem driver (we’ll call it the CloudFS, or CFS for short). We’ll use Dropbox’s web API to provide the permanent storage, and will implement a cache of our Dropbox contents in our CFS driver. A lock service will make sure that only a single client writes to a file at a time (HW2). Over the course of the semester, we’ll set up the cache to be distributed among multiple CFS clients, allowing them to fetch data directly from each other (HW3), distribute the lock server between the clients to increase fault tolerance (HW4), investigate fault recovery (HW5), and add security and auditing (HW6).

Acknowledgements

Our CloudFS is largely inspired by the yfs lab series from MIT’s 6.824 course, developed by Robert Morris, Frans Kaashoek and the 6.824 staff.

Getting Started

This assignment totals 80 points, and will correspond to 8% of your final grade for this course.

I have provided a basic implementation of CFS that mounts a folder of your Dropbox as a disk on your local machine. For Homework 1, you will implement a simple cache in the CFS client, improving performance by decreasing the number of times that the client needs to connect to Dropbox. For this assignment, we will assume that there is only a single instance of CFS running at any time. In all of the assignments we will assume that there is no way to write to your CFS Dropbox folder except through CFS.

Our worldview looks much simpler than the one above – since we’re only considering a single client:

HW1: Implement the cache, given existing implementation of CFS, CloudFS and DropboxProvider.

We’ll provide an implementation of everything but the memory cache: that’ll be your task for this assignment.

Taking a look at the code

If you’d like to do your development in Eclipse, generate an Eclipse project file using the command mvn eclipse:eclipse (execute this in the fusedriver directory). There are several main classes that make up the CFS driver. Take a look at them and examine the comments.

- CloudFS: The main class that implements the FUSE filesystem driver. CloudFS abstracts away a lot of the functionality of a filesystem driver (e.g. seeking through files) and instead delegates some higher-level operations to the CloudProvider. You should not modify either class.

- CloudProvider: CloudProvider specifies an interface for a simple file system. CloudProviders are delegating: a CloudProvider might choose to handle a file operation itself or, if it can’t, let another one handle it. You should not modify this class. Take a look at the functions of CloudProvider. The most important functions are get and openDir, which get the contents of a file and read the contents of a directory (respectively). These functions are passed a pointer to a CloudPath, and work by calling setXXX on that file or directory to tell CFS information about the file or path.

- DropBoxProvider: DropBoxProvider is a CloudProvider implementation that caches nothing locally, always connecting to Dropbox for requests. You should not modify this class, and you should not replicate its behavior elsewhere in the code.

- MemCacheProvider: MemCacheProvider is a CloudProvider that does nothing, always delegating to the DropBoxProvider. For this homework, you should write the majority of your code in the MemCacheProvider class.

- CloudDirectory, CloudFile, CloudPath: CloudDirectory and CloudFile represent directories and files, respectively, while CloudPath is a generic type that can represent either a file or a directory. You should not need to modify these classes. You should ignore the pool index.

Configuring your environment

CFS is implemented as a FUSE module. FUSE is an interface that allows user-space (i.e. non-kernel) programs to define a filesystem that the system can then mount and use. Hence, you will need to have FUSE installed on your machine. FUSE is available for Mac OS X and Linux (most distributions have the package libfuse-dev). In theory, you should be able to run CFS directly on your Mac or Linux computer. In practice, we will not support this. To ensure that your CFS driver works on our machines without fail, we provide an easy-to-use VM that has all of the dependencies for this project. We strongly encourage you to develop (or at least test) your CFS implementation on our provided VM. You will also need a JDK (preferably Oracle’s JDK 1.8.0, update 60 or later), Apache Maven, and git. Again, our VM contains all of these dependencies.

Our VM is packaged in Vagrant. Vagrant is a frontend that wraps a VM provider (in this case, VirtualBox). First, install VirtualBox. Then, install Vagrant. The configuration for the VM is already included in the repository. After installing VirtualBox and Vagrant, run the command vagrant up from the root of your repository (the SWE622CloudFS directory). Let it run for a bit: the VM is about 1.3GB, so it’ll take a while to download. If it errors out while downloading, just run the command again; it will pick up where it left off (there seems to be some weird issue with the GMU server). Once it finally finishes, that’s it! Now you have a VM set up and running with everything you need to run CFS.

Example output:

    SWE622CloudFS$ vagrant up
    Bringing machine 'default' up with 'virtualbox' provider...
    ==> default: Box 'javadebug' could not be found. Attempting to find and install...
    default: Box Provider: virtualbox
    default: Box Version: >= 0
    ==> default: Loading metadata for box 'http://cs.gmu.edu/~bellj/vagrant/javadebug.json'
    default: URL: http://cs.gmu.edu/~bellj/vagrant/javadebug.json
    ==> default: Adding box 'javadebug' (v0.1.0) for provider: virtualbox
    default: Downloading: http://cs.gmu.edu/~bellj/vagrant/javadebug/boxes/javadebug_0.1.2.box
    ==> default: Box download is resuming from prior download progress
    default: Calculating and comparing box checksum...
    ==> default: Successfully added box 'javadebug' (v0.1.0) for 'virtualbox'!
    ==> default: Importing base box 'javadebug'...
    ==> default: Matching MAC address for NAT networking...
    ==> default: Checking if box 'javadebug' is up to date...
    ==> default: Setting the name of the VM: SWE622CloudFS_default_1484795963175_47573
    ==> default: Clearing any previously set network interfaces...
    ==> default: Preparing network interfaces based on configuration...
    default: Adapter 1: nat
    ==> default: Forwarding ports...
    default: 22 (guest) => 2222 (host) (adapter 1)
    ==> default: Booting VM...
    ==> default: Waiting for machine to boot. This may take a few minutes...
    default: SSH address: 127.0.0.1:2222
    default: SSH username: vagrant
    default: SSH auth method: private key
    ==> default: Machine booted and ready!
    ==> default: Checking for guest additions in VM...
    default: The guest additions on this VM do not match the installed version of
    default: VirtualBox! In most cases this is fine, but in rare cases it can
    default: prevent things such as shared folders from working properly. If you see
    default: shared folder errors, please make sure the guest additions within the
    default: virtual machine match the version of VirtualBox you have installed on
    default: your host and reload your VM.
    default:
    default: Guest Additions Version: 4.2.0
    default: VirtualBox Version: 5.0
    ==> default: Mounting shared folders...
    default: /vagrant => /Users/jon/Documents/GMU/Teaching/SWE622CloudFS

Again, from this directory, type:

    SWE622CloudFS$ vagrant ssh
    vagrant@precise64:~$ cd /vagrant/
    vagrant@precise64:/vagrant$ ls
    fusedriver README.md Vagrantfile

And you’ll find that you are magically connected to this VM, and that the directory /vagrant magically maps back to the directory on your own computer where your git repository lives.

You can read more about Vagrant in their documentation.

We very strongly suggest that you use an IDE for your development tasks (e.g. Eclipse or IntelliJ). With the VM setup that we provide, it should be pretty straightforward to run an IDE directly on your machine, and then do testing in the VM, because your project directory will be shared between the VM and your host machine.

Register a Dropbox Application

Files in CFS are stored in Dropbox. The basic implementation of CFS that you will start from already has all code needed to connect to Dropbox and mount your Dropbox as a FUSE filesystem. If you do not already have one, you must create a (free) Dropbox account. You must also register your project with Dropbox to get an API key.

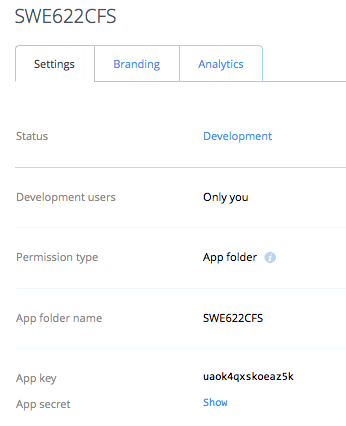

To register your application, start at the Dropbox Developer Center and answer the prompts:

- “Choose an API” -> Select “Dropbox API”

- “Choose the type of access you need” -> select “App Folder”

- “Name your app” -> Enter something like “SWE622CFS-YourName” (Dropbox will complain if you use a name that already exists)

Now, you’ll be taken to a screen showing the overview of your app. Click “Show” next to “app secret,” and then note down your App key and App secret.

Configure CFS

Get your basic implementation of CFS by creating your GitHub HW 1 Repository, and then cloning that repository to your local machine. If you’d like to do your development in eclipse, generate an eclipse project file using the command mvn eclipse:eclipse (execute this in the fusedriver directory). Copy the file fusedriver/src/main/resources/default.properties to fusedriver/src/main/resources/config.properties. Update the file based on the values that you noted from registering your app in the previous step (you must update all 3: key, secret, and app name). Example:

    DROPBOX_KEY=kljsakdlfjl34jkl
    DROPBOX_SECRET=sdf4ikjklf8
    DROPBOX_APP_NAME=SWE622CloudFS
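If you want to double-check that all three settings are filled in before you run CFS, a small stand-alone check like the following can help. This is only a sketch: the class name ConfigCheck and its method are hypothetical and not part of the provided code, and it says nothing about how CFS itself loads config.properties. It only uses java.util.Properties and the three key names from the example above.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper (not part of CFS): reports which required settings are missing.
class ConfigCheck {
    // Key names taken from the example config.properties above.
    static final String[] REQUIRED = {"DROPBOX_KEY", "DROPBOX_SECRET", "DROPBOX_APP_NAME"};

    // Returns the names of required settings that are absent or blank.
    static List<String> missingKeys(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED) {
            String value = props.getProperty(key);
            if (value == null || value.trim().isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }
}
```

To use it, read the text of your config.properties into a String and pass it to ConfigCheck.missingKeys; an empty list means all three values are present.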

Now, you’ll compile the code and run it to link it to your Dropbox. In the fusedriver directory, run mvn -DskipTests=true package

mvn package will run test cases against CFS before generating the final package. If you do NOT want these tests to run, specify the -DskipTests=true argument. The very first time that you connect to Dropbox, you should do it manually (e.g. not through a test). The tests will not be particularly fast (especially without this assignment implemented) so we recommend that you do not run them until you are ready to.

    vagrant@precise64:/vagrant/fusedriver$ mvn -DskipTests=true package
    [INFO] Scanning for projects...
    [WARNING]
    [WARNING] Some problems were encountered while building the effective model for edu.gmu.swe622:CloudFS:jar:0.0.1-SNAPSHOT
    [WARNING] 'build.plugins.plugin.version' for org.apache.maven.plugins:maven-jar-plugin is missing. @ line 18, column 12
    [WARNING]
    [WARNING] It is highly recommended to fix these problems because they threaten the stability of your build.
    [WARNING]
    [WARNING] For this reason, future Maven versions might no longer support building such malformed projects.
    [WARNING]
    [INFO]
    [INFO] ------------------------------------------------------------------------
    [INFO] Building CloudFS 0.0.1-SNAPSHOT
    [INFO] ------------------------------------------------------------------------
    [INFO]
    [INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ CloudFS ---
    [WARNING] Using platform encoding (UTF-8 actually) to copy filtered resources, i.e. build is platform dependent!
    [INFO] Copying 2 resources
    [INFO]
    [INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ CloudFS ---
    [INFO] Nothing to compile - all classes are up to date
    [INFO]
    [INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ CloudFS ---
    [WARNING] Using platform encoding (UTF-8 actually) to copy filtered resources, i.e. build is platform dependent!
    [INFO] skip non existing resourceDirectory /vagrant/fusedriver/src/test/resources
    [INFO]
    [INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ CloudFS ---
    [INFO] Nothing to compile - all classes are up to date
    [INFO]
    [INFO] --- maven-surefire-plugin:2.17:test (default-test) @ CloudFS ---
    [INFO] Tests are skipped.
    [INFO]
    [INFO] --- maven-jar-plugin:2.4:jar (default-jar) @ CloudFS ---
    [INFO]
    [INFO] --- maven-shade-plugin:2.3:shade (default) @ CloudFS ---
    [INFO] Including fuse-jna:fuse-jna:jar:1.0.3 in the shaded jar.
    [INFO] Including net.java.dev.jna:jna:jar:4.1.0 in the shaded jar.
    [INFO] Including org.codehaus.groovy:groovy-all:jar:2.1.5 in the shaded jar.
    [INFO] Including com.dropbox.core:dropbox-core-sdk:jar:1.7.7 in the shaded jar.
    [INFO] Including com.fasterxml.jackson.core:jackson-core:jar:2.4.0-rc3 in the shaded jar.
    [WARNING] jackson-core-2.4.0-rc3.jar, CloudFS-0.0.1-SNAPSHOT.jar define 84 overlappping classes:
    [WARNING] - com.fasterxml.jackson.core.sym.BytesToNameCanonicalizer$Bucket
    [WARNING] - com.fasterxml.jackson.core.FormatSchema
    [WARNING] - com.fasterxml.jackson.core.io.InputDecorator
    [WARNING] - com.fasterxml.jackson.core.sym.BytesToNameCanonicalizer
    [WARNING] - com.fasterxml.jackson.core.JsonGenerator$Feature
    [WARNING] - com.fasterxml.jackson.core.io.SegmentedStringWriter
    [WARNING] - com.fasterxml.jackson.core.type.ResolvedType
    [WARNING] - com.fasterxml.jackson.core.TreeNode
    [WARNING] - com.fasterxml.jackson.core.sym.Name
    [WARNING] - com.fasterxml.jackson.core.util.JsonGeneratorDelegate
    [WARNING] - 74 more...
    [WARNING] dropbox-core-sdk-1.7.7.jar, CloudFS-0.0.1-SNAPSHOT.jar define 161 overlappping classes:
    [WARNING] - com.dropbox.core.DbxClient$14
    [WARNING] - com.dropbox.core.DbxOAuth1Upgrader$3
    [WARNING] - com.dropbox.core.DbxEntry$WithChildren$2
    [WARNING] - com.dropbox.core.DbxException$RetryLater
    [WARNING] - com.dropbox.core.DbxClient$2
    [WARNING] - com.dropbox.core.util.Collector$NullSkipper
    [WARNING] - com.dropbox.core.json.JsonReader
    [WARNING] - com.dropbox.core.DbxClient$17
    [WARNING] - com.dropbox.core.json.JsonWriter
    [WARNING] - com.dropbox.core.DbxClient$ChunkedUploadState
    [WARNING] - 151 more...
    [WARNING] CloudFS-0.0.1-SNAPSHOT.jar, jna-4.1.0.jar define 104 overlappping classes:
    [WARNING] - com.sun.jna.Callback$UncaughtExceptionHandler
    [WARNING] - com.sun.jna.Native$4
    [WARNING] - com.sun.jna.ptr.ShortByReference
    [WARNING] - com.sun.jna.Pointer
    [WARNING] - com.sun.jna.Structure$FFIType$size_t
    [WARNING] - com.sun.jna.Memory$SharedMemory
    [WARNING] - com.sun.jna.Native$7
    [WARNING] - com.sun.jna.Native$ffi_callback
    [WARNING] - com.sun.jna.Native$Buffers
    [WARNING] - com.sun.jna.TypeMapper
    [WARNING] - 94 more...
    [WARNING] groovy-all-2.1.5.jar, CloudFS-0.0.1-SNAPSHOT.jar define 3924 overlappping classes:
    [WARNING] - org.codehaus.groovy.runtime.dgm$486
    [WARNING] - groovyjarjarasm.asm.commons.RemappingClassAdapter
    [WARNING] - org.codehaus.groovy.runtime.dgm$90
    [WARNING] - groovy.swing.factory.DialogFactory
    [WARNING] - groovyjarjarantlr.AlternativeBlock
    [WARNING] - groovy.text.XmlTemplateEngine$XmlTemplate
    [WARNING] - groovy.ui.OutputTransforms$_loadOutputTransforms_closure4
    [WARNING] - groovy.swing.LookAndFeelHelper$_closure6
    [WARNING] - groovyjarjarantlr.debug.InputBufferEvent
    [WARNING] - groovyjarjarasm.asm.Attribute
    [WARNING] - 3914 more...
    [WARNING] fuse-jna-1.0.3.jar, CloudFS-0.0.1-SNAPSHOT.jar define 226 overlappping classes:
    [WARNING] - net.fusejna.StructFuseFileInfo$ByReference
    [WARNING] - net.fusejna.StructFuseOperations$7
    [WARNING] - net.fusejna.StructStat$X86_64$ByReference
    [WARNING] - net.fusejna.StructFlock$NotFreeBSD$ByReference
    [WARNING] - net.fusejna.LoggedFuseFilesystem$8
    [WARNING] - net.fusejna.LoggedFuseFilesystem$12
    [WARNING] - net.fusejna.FuseJna$1
    [WARNING] - net.fusejna.StructStat$StatWrapper
    [WARNING] - net.fusejna.StructFlock$NotFreeBSD
    [WARNING] - net.fusejna.LoggedFuseFilesystem$32
    [WARNING] - 216 more...
    [WARNING] maven-shade-plugin has detected that some .class files
    [WARNING] are present in two or more JARs. When this happens, only
    [WARNING] one single version of the class is copied in the uberjar.
    [WARNING] Usually this is not harmful and you can skeep these
    [WARNING] warnings, otherwise try to manually exclude artifacts
    [WARNING] based on mvn dependency:tree -Ddetail=true and the above
    [WARNING] output
    [WARNING] See http://docs.codehaus.org/display/MAVENUSER/Shade+Plugin
    [INFO] Replacing original artifact with shaded artifact.
    [INFO] Replacing /vagrant/fusedriver/target/CloudFS-0.0.1-SNAPSHOT.jar with /home/ubuntu/622cloudfs/fusedriver/target/CloudFS-0.0.1-SNAPSHOT-shaded.jar
    [INFO] Dependency-reduced POM written at: /vagrant/fusedriver/dependency-reduced-pom.xml
    [INFO] Dependency-reduced POM written at: /vagrant/fusedriver/dependency-reduced-pom.xml
    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 3.739 s
    [INFO] Finished at: 2017-01-12T15:24:47-05:00
    [INFO] Final Memory: 11M/44M
    [INFO] ------------------------------------------------------------------------

When we run CFS, it will take a single argument: the empty directory to be used as the mount point for the CFS.

    vagrant@precise64:/vagrant/fusedriver$ java -jar target/CloudFS-0.0.1-SNAPSHOT.jar
    Usage: CloudFS mountpoint {hello}

Let’s try running it:

    vagrant@precise64:/vagrant/fusedriver$ mkdir cfsmnt
    vagrant@precise64:/vagrant/fusedriver$ java -jar target/CloudFS-0.0.1-SNAPSHOT.jar cfsmnt
    Logging in to dropbox for the first time...
    1. Go to: https://www.dropbox.com/1/oauth2/authorize?locale=en_US&client_id=s3ilyg3nllfa4ud&response_type=code
    2. Click "Allow" (you might have to log in first)
    3. Copy the authorization code and paste it here, then hit return.

Follow the instructions to connect CFS to your Dropbox account. Visit the URL specified (your own app will have a different URL from the one shown here), then click “Allow,” then copy the code, and paste it back in the window running CFS, then hit return. You will only need to perform this step once (per computer). After pasting the code in, you should get something like this:

    vagrant@precise64:/vagrant/fusedriver$ java -jar target/CloudFS-0.0.1-SNAPSHOT.jar cfsmnt
    Logging in to dropbox for the first time...
    1. Go to: https://www.dropbox.com/1/oauth2/authorize?locale=en_US&client_id=s3ilyg3nllfa4ud&response_type=code
    2. Click "Allow" (you might have to log in first)
    3. Copy the authorization code and paste it here, then hit return.
    neiOdMqHJjMAAAAAAAAjcnSKN7bo512VxyG-YZ7QdZw
    Got token: neiOdMqHJjMAAAAAAAAjc0vxQZlIXyoJ8ZdA9XpXtAgEiWUzRbrEsugZxH86D5bN
    Linked account: Jon Bell

In a new shell window, try to cd into your cfsmnt folder, and try creating a file/folder. You’ll notice that it’s not very fast. This is why we need a local cache.

Grading details and the nasty bits

This assignment totals 80 points, and will be worth 8% of your cumulative, final grade. The grading will be broken down into the three parts as listed below.

Your submission must build with mvn package. Do not modify the pom.xml. Assignments that don’t compile will immediately lose 50% credit. There will be NO exceptions to this rule, for any reason, including that some files were missing from your submission because you forgot to track them with git. It is purely your responsibility to ensure that your submission is correct and compiles. For each part, add a short description in the README.md in your repository describing your high-level design decisions and the challenges that you faced implementing that part.

Your code should have sufficient comments that I can read and understand it.

Part 1: HelloWorldFS (5 points)

Before building up the memory caching layer, we’re going to do a short exercise to make sure that you can get acquainted with the interfaces provided. Specifically, you’ll create a CloudProvider that does NOTHING with Dropbox, and instead, just creates a simple, static, read-only file system. Your HelloWorldProvider should represent a filesystem with only a single file, “hello” (in the root directory), which contains the string “hello world.” You must implement this filesystem driver in the HelloWorldProvider class. To get the HelloWorldFS when you run CFS, add the argument “hello.” Example:

    vagrant@precise64:/vagrant$ java -jar target/CloudFS-0.0.1-SNAPSHOT.jar cfsmnt/ hello
    vagrant@precise64:/vagrant$ ls -lash cfsmnt/
    total 8
    0 drwxrwxrwx 1 jon staff 0B Jan 12 20:35 ./
    0 drwxr-xr-x 20 jon staff 680B Jan 12 20:35 ../
    8 -rwxrwxrwx 1 jon staff 12B Dec 31 1969 hello*
    vagrant@precise64:/vagrant$ cat cfsmnt/hello
    hello world

Remember that YOU are the one defining what the files are in this case – there is nowhere that the “hello” file will actually be stored on your computer. Instead, the CFS will create its own filesystem (under, in this case, cfsmnt) and then it is the job of your HelloWorldProvider to make it APPEAR as though there is a single file in the root directory of THAT filesystem. Take a look at the CloudFile and CloudDirectory classes: the goal with the two functions in HelloWorldProvider is to modify the passed CloudFile and CloudDirectory to make the “hello” file appear (with its contents). You should call the various setXXX (or add) methods on the provided file/directory to make the “hello” file appear.

(Added 2/2/17): Implementing a CloudProvider might seem non-intuitive if you are not used to writing callback-oriented programs. When you write a CloudProvider, you aren’t necessarily writing code that will be called immediately: you are defining functionality that will run when something else happens. In this case, whatever you put in openDir will be called by the operating system when you type ls cfsmnt. And rather than returning some value, you change the state of the system by calling methods on one of the parameters to modify it. In this case, in the openDir method, you’ll want to use CloudDirectory.add(new CloudFile...) to add the file to the / directory when you’re asked about it. Similarly, in get, you’ll want to fill the passed CloudFile object with information about what the /hello file contains. In this case, you’ll need to set its contents (which should be the bytes of the string “hello world”), and set its length (which you can calculate yourself, or read off the listing above: 12 bytes, one more than the 11 characters of “hello world”, which suggests a trailing newline).
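To make the callback style concrete, here is a minimal, self-contained sketch. It deliberately does not use the real CFS classes: SketchFile, SketchDirectory, and SketchProvider are hypothetical stand-ins invented for this illustration, and their fields and method signatures are assumptions rather than the actual CloudFile/CloudDirectory/CloudProvider API. The only point is the shape: the caller hands you an object, and you fill it in instead of returning a value.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a file object that a driver passes to a provider.
class SketchFile {
    final String name;
    byte[] contents;
    long length;

    SketchFile(String name) { this.name = name; }
}

// Hypothetical stand-in for a directory object that a driver passes to a provider.
class SketchDirectory {
    final List<SketchFile> children = new ArrayList<>();

    void add(SketchFile f) { children.add(f); }
}

// A provider in the callback style: nothing here runs until the driver calls it.
class SketchProvider {
    static final byte[] DATA = "some bytes".getBytes(StandardCharsets.UTF_8);

    // Called when someone lists a directory: describe the children by mutating 'dir'.
    void openDir(String path, SketchDirectory dir) {
        if (path.equals("/")) {
            dir.add(new SketchFile("example.txt"));
        }
    }

    // Called when someone reads a file: fill in 'file' instead of returning data.
    void get(String path, SketchFile file) {
        if (path.equals("/example.txt")) {
            file.contents = DATA;
            file.length = DATA.length;
        }
    }
}
```

Notice that neither method returns anything. The driver creates the SketchDirectory or SketchFile, passes it in, and reads back whatever the provider set on it.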

Hints:

You only need to implement TWO of the methods of CloudProvider to get this to work – the two that are already stubbed in the HelloWorldProvider.

Part 2: Add in-memory caching (45 points)

Now that you’ve figured out the basics of the CloudProvider interface, let’s revisit the default configuration (with the DropBoxProvider). You have probably noticed that the Dropbox interface is really slow: for every single filesystem operation, we connect to Dropbox. For this part of the assignment, you’ll add a local cache to these operations. Assume that there is exactly one CFS client running at a time, and there are no other ways to access its part of Dropbox. Also, assume that the in-memory storage space of your CFS client is at least as large as your Dropbox storage (i.e., no need to worry about running out of cache space). Hence, it’s reasonable for your CFS cache to cache everything that it hears back from Dropbox. Implement such a cache in MemCacheProvider, so that:

- If the request has been made before, it’s served from cache

- If the request has not been made before, it’s made to the parent provider (which in this case will be the Dropbox provider). For instance, in pseudo-code:

      @Override
      public void get(String path, CloudFile getTo, boolean withContent) throws IOException, FileNotFoundException {
          if (!inCache(path))
              super.get(path, getTo, withContent);
          serveFromCache(path);
      }

Make sure to consider all of the possible operations of CloudProvider, and cache as appropriate. For instance, when a new file is created, while this will require that the new file is uploaded to Dropbox, future reads of that file can occur without requesting that same file back from Dropbox. You may not use 3rd party code to implement the cache – use only the code provided and classes available in the Java API.
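The point about newly created files can be illustrated with a small write-through cache. This is a sketch under loud assumptions: SlowStore and WriteThroughCache are hypothetical classes made up for this example (SlowStore plays the role of Dropbox and simply counts remote reads), the cache is a flat map rather than the tree suggested in the hints, and it is not thread-safe. It uses only classes from the Java API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the slow remote store (the role Dropbox plays in CFS).
class SlowStore {
    final Map<String, byte[]> remote = new HashMap<>();
    int reads = 0; // counts how many times we had to go to the remote store

    byte[] get(String path) {
        reads++;
        return remote.get(path);
    }

    void put(String path, byte[] data) {
        remote.put(path, data);
    }
}

// Write-through cache: writes go to the store AND the cache; reads try the cache first.
// Not thread-safe; this only illustrates the caching idea.
class WriteThroughCache {
    private final SlowStore store;
    private final Map<String, byte[]> cache = new HashMap<>();

    WriteThroughCache(SlowStore store) {
        this.store = store;
    }

    void put(String path, byte[] data) {
        store.put(path, data); // the upload still has to happen
        cache.put(path, data); // but later reads can skip the download
    }

    byte[] get(String path) {
        byte[] hit = cache.get(path);
        if (hit != null) {
            return hit; // served from cache, no remote read
        }
        byte[] fetched = store.get(path); // cache miss: one remote read
        if (fetched != null) {
            cache.put(path, fetched); // remember it for next time
        }
        return fetched;
    }
}
```

After a put, a get for the same path never touches the store, and a path that was never written locally costs exactly one remote read no matter how many times it is read afterwards.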

Hints: You should probably consider structuring your cache in a way similar to how directories are structured (e.g. as a tree). This will probably make it easier to reason about how things are updated and changed.

You can find several simple test cases for your memory cache in the src/test directory. They’ll execute automatically when you type mvn package or mvn test. Note that these are by no means a complete test suite, but should serve as a very basic smoke test to tell you that you are on the right path. You may find it useful to write more test cases to help make sure that your implementation is complete and correct. However, your test cases will not be graded.

One way to organize your in-memory cache is to simply use instances of CloudFile and CloudDirectory, and keep them nested in the same way as they are actually nested on the filesystem. For example, consider a file stored at /my/folder/file. When get is called, you’ll get passed that path: /my/folder/file. How could you find that file? You can break down the path into its individual components: we know that the file name is file, and that it’s stored in the folder folder, in the folder my, in the folder /. In fact, we know that ALL files are going to be, at some point, stored under the path /. So, we might use an instance of CloudDirectory to represent the root (/). Then we know that first we need to check our cache to find /, then to find my in /, then to find folder in my, then to find file in folder. Here’s some pseudo-Java code that uses a nice recursive algorithm to do this:

    CloudDirectory rootDir; //Set this up somehow, perhaps when your app loads

    public CloudPath getFromCacheOrDropbox(Path p)
    {
        if(p.toString().equals("/"))
            return rootDir;
        CloudDirectory parentDir = (CloudDirectory) getFromCacheOrDropbox(p.getParent());
        //Now we know the parent directory of whatever we're looking for
        //Just find the requested directory in that one.
        String str = p.getFileName().toString();
        for(CloudPath cp : parentDir.listFiles())
            if(cp.getName().equals(str))
                return cp;
        //Get the CloudFile/CloudDirectory you're looking for from Dropbox, then add it to parent
        return what you just got from cloud;
    }
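The same tree-walking idea can be tried out in isolation with a tiny runnable sketch. The classes below are assumptions made for illustration only: CacheNode and TreeCache are hypothetical and do not use CloudFile, CloudDirectory, or Dropbox. A real cache would store the CFS objects and fetch from the parent provider on a miss. The sketch walks the path iteratively rather than recursively, using java.nio.file.Path to split it into components.

```java
import java.nio.file.Path;
import java.util.HashMap;
import java.util.Map;

// Hypothetical cache node; a real implementation might hold CloudFile/CloudDirectory instead.
class CacheNode {
    final String name;
    final boolean directory;
    final Map<String, CacheNode> children = new HashMap<>();

    CacheNode(String name, boolean directory) {
        this.name = name;
        this.directory = directory;
    }
}

// Hypothetical tree-shaped cache keyed by path components.
class TreeCache {
    final CacheNode root = new CacheNode("/", true);

    // Walk from the root one path component at a time; return null on a cache miss.
    CacheNode lookup(Path p) {
        CacheNode current = root;
        for (Path part : p) { // yields "my", "folder", "file" for /my/folder/file
            if (!current.directory) {
                return null; // cannot descend into a file
            }
            current = current.children.get(part.toString());
            if (current == null) {
                return null; // not cached (a real cache would now ask its parent provider)
            }
        }
        return current;
    }

    // Insert an entry, creating any missing parent directories along the way.
    // Simplification: does not check whether an existing parent is actually a file.
    CacheNode insert(Path p, boolean directory) {
        CacheNode current = root;
        int count = p.getNameCount();
        for (int i = 0; i < count; i++) {
            String name = p.getName(i).toString();
            boolean dir = (i < count - 1) || directory; // all parents are directories
            current = current.children.computeIfAbsent(name, n -> new CacheNode(n, dir));
        }
        return current;
    }
}
```

For example, after insert(Paths.get("/my/folder/file"), false), looking up /my/folder returns a directory node, looking up /my/folder/file returns the file node, and looking up /my/other returns null.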

Part 3: Cache Consistency (30 points)

Even without considering multiple CFS processes running at a time, there is still a pesky consistency problem with your cache: CFS itself is multi-threaded. That is, multiple processes running on your computer might access the CFS filesystem at the same time, and due to the multithreading within FUSE, multiple CloudProvider calls can occur simultaneously. Hence, you must add thread-safety to your cache. Here are some considerations for how you decide to perform locking in your cache:

- Before implementing things, it might help to plan out your locking strategy. We encourage you to write this down in your README.md file.

- One obvious solution is to take a single lock before touching the cache at all. No inconsistency would then be possible, but CFS would in effect become totally sequential (single-threaded). This is far too aggressive: there could be no parallelism. This solution would receive 0 credit.

- While a directory is being listed (i.e. via get), its contents must not change.

- While a file is being read, it must not be written. While a file is being read, other threads can read it as well. Only one thread can write to a file at a time.

- You should not synchronize on CloudPath instances. If you synchronize on a path, use the Lock provided by CloudPath.getLock().

Hint: If you use the suggested mechanism above for finding and caching CloudPaths, it should be relatively straightforward to identify which parent directories need to be locked when you access one of their children.
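The "many readers, one writer" rule from the list above is the classic read/write lock pattern. The sketch below shows it for a single cached entry using java.util.concurrent.locks.ReentrantReadWriteLock. It is an illustration only: LockedEntry is a hypothetical class, and in your CFS code you should use the lock that CloudPath.getLock() provides (its exact type is not shown in this handout, so nothing here should be read as its API).

```java
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical stand-in for one cached file that carries its own lock.
class LockedEntry {
    private final ReadWriteLock lock = new ReentrantReadWriteLock();
    private byte[] contents = new byte[0];

    // Many threads may hold the read lock at the same time.
    byte[] read() {
        lock.readLock().lock();
        try {
            return contents.clone(); // copy so callers cannot modify cached data
        } finally {
            lock.readLock().unlock(); // always release, even if an exception is thrown
        }
    }

    // The write lock is exclusive: it waits until no reader or writer holds the lock.
    void write(byte[] data) {
        lock.writeLock().lock();
        try {
            contents = data.clone();
        } finally {
            lock.writeLock().unlock();
        }
    }
}
```

Because each entry has its own lock, two threads working on different files never block each other, which is what distinguishes this from the single global lock ruled out above. Releasing in a finally block matters: a lock that is never released will stall every later operation on that entry.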

Submission

Perform all of your work in your homework-1 git repository. Commit and push your assignment. Once you are ready to submit, create a release, tagged “hw1.” Unless you want to submit a different version of your code, leave it at “master” to release the most recent code that you’ve pushed to GitHub. Make sure that your name is specified somewhere in the release notes. The time that you create your release will be the time used to judge that you have submitted by the deadline. This is NOT the time that you push your code.

Make sure that your released code includes all of your files and builds properly. You can do this by clicking to download the archive, and inspecting/trying to build it on your own machine/vm. There is no need to submit binaries/jar files (the target/ directory is purposely ignored from git).

If you want to resubmit before the deadline: simply create a new release; we will automatically use the last release you published. If you submitted before the deadline, then decide to make a new release within 24 hours of the deadline, we will automatically grade your late submission, and subtract 10%. Any releases created more than 24 hours past the deadline will be ignored.

Change log:

1/25/17: Clarified how to get a private GitHub repository. Clarified the distinction between filesystems. Clarified that Dropbox app name should NOT be the example name. Gave a few more hints for the HelloWorldFS.